I built an AI to beat Alex in Rock Paper Scissors

In an era where AI, ML, and computer vision shine and take over both the simple and complex aspects of life, an important question arises: How competent are these artificial intelligences? Can they solve problems faster than us? Well, sure… but can they win at rock-paper-scissors against a human? That's the intriguing question we'll delve into in this blog post.

Firstly, let's talk about implementation. I'll cover the tools we're using so no one accuses me of cheating later on ;).

Implementation

Here are the tools at play. We're using the libraries OpenCV and MediaPipe. OpenCV is an open-source computer vision library with Python bindings, and MediaPipe is an open-source, cross-platform, customizable ML solution for live and streaming media.

# Install OpenCV for Python

$ pip3 install opencv-python

# Install MediaPipe for Python

$ pip3 install mediapipeimport cv2

import mediapipe as mp

mp_drawing = mp.solutions.drawing_utils

mp_drawing_styles = mp.solutions.drawing_styles

mp_holistic = mp.solutions.holistic

# For webcam input:

cap = cv2.VideoCapture(0)

previous_time = 0

with mp_holistic.Holistic(min_detection_confidence=0.5, min_tracking_confidence=0.5) as holistic:

while cap.isOpened():

success, image = cap.read()

if not success:

print("Ignoring empty camera frame.")

break

image.flags.writeable = False

image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)

results = holistic.process(image)

image.flags.writeable = True

image = cv2.cvtColor(image, cv2.COLOR_RGB2BGR)

# Draw Face Landmarks

mp_drawing.draw_landmarks(

image,

results.face_landmarks,

mp_holistic.FACEMESH_CONTOURS,

mp_drawing.DrawingSpec(

color=(255,0,255),

thickness=1,

circle_radius=1

),

mp_drawing.DrawingSpec(

color=(0,255,255),

thickness=1,

circle_radius=1

)

)

# Draw Hand Landmarks

mp_drawing.draw_landmarks(

image,

results.right_hand_landmarks,

mp_holistic.HAND_CONNECTIONS

)

mp_drawing.draw_landmarks(

image,

results.left_hand_landmarks,

mp_holistic.HAND_CONNECTIONS

)

frame = cv2.flip(image, 1)

cv2.imshow('frame', frame)

if cv2.waitKey(5) & 0xFF == ord('q'):

break

cap.release()

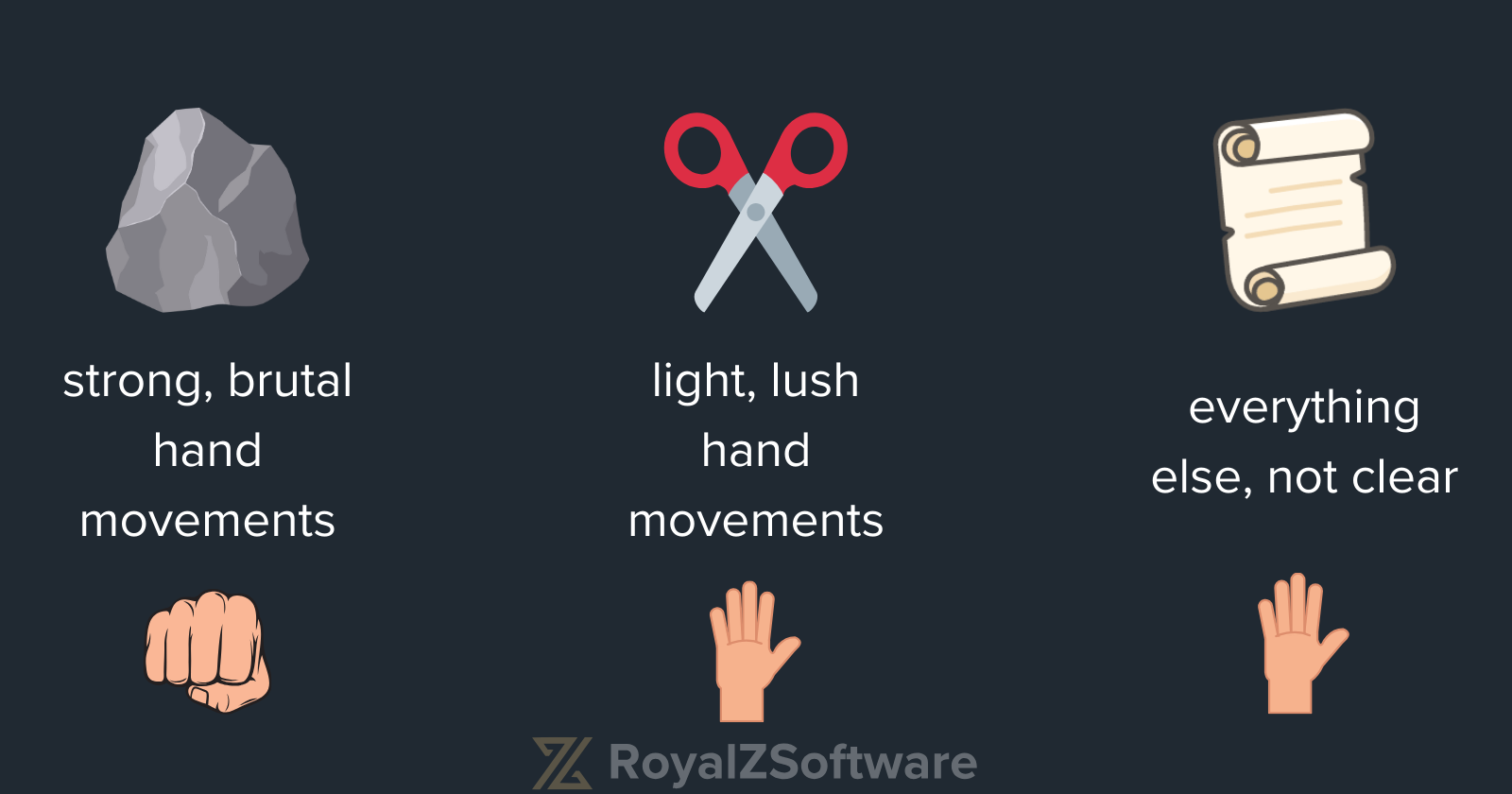

cv2.destroyAllWindows()Unlike a machine, a human usually gives away subtle hints. By reading micro-expressions, we can identify certain tendencies of a person and predict their next move. Initially, we need an association between gestures, facial expressions, and their corresponding moves. One of the micro-expressions I've implemented is the clenched fist.

How often? How hard? How long? These are all indicators of a potential move. At least, that's what I think. Is the player nervous and hence choosing rock? Is the player relaxed, sticking with paper, or maybe it's scissors after all?

But how do we document such assertions? With math. Even basic math. MediaPipe provides us with face and hand landmarks that can be interpreted as vectors. Simple operations on these vectors, such as the distance between two points, the scalar product, the cross product, and of course the angle between two vectors, give us the desired results.
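To make the vector math concrete, here is a minimal sketch of those operations in plain Python. The function names (`vector_between`, `norm`, `dot`, `cross`, `angle_between`) are my own choices for illustration and are not part of MediaPipe; points are assumed to be simple `[x, y, z]` lists.

```python
import math

def vector_between(start, end):
    """Vector pointing from point `start` to point `end`."""
    return [e - s for s, e in zip(start, end)]

def norm(u):
    """Length of vector u."""
    return math.hypot(*u)

def dot(u, v):
    """Scalar (dot) product of u and v."""
    return sum(a * b for a, b in zip(u, v))

def cross(u, v):
    """Cross product of two 3D vectors."""
    return [
        u[1] * v[2] - u[2] * v[1],
        u[2] * v[0] - u[0] * v[2],
        u[0] * v[1] - u[1] * v[0],
    ]

def angle_between(u, v):
    """Angle between u and v in degrees (0.0 if either vector has zero length)."""
    denominator = norm(u) * norm(v)
    if denominator == 0:
        return 0.0
    # Clamp to [-1, 1] so rounding errors cannot push acos out of its domain.
    cos_theta = max(-1.0, min(1.0, dot(u, v) / denominator))
    return math.degrees(math.acos(cos_theta))
```

The distance between two landmarks is then simply `norm(vector_between(a, b))`.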

Identifying the rock. For each finger, we can compare the vector from the MCP joint (the knuckle) to the PIP joint with the vector from the PIP joint to the DIP joint. If the angle between the two exceeds a certain tolerance, the vectors don't align, which indicates the finger isn't extended. Thus, if the index and middle fingers are extended while the others aren't, we can assume the player is using Scissors. All fingers extended implies Paper. Otherwise, Rock.
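A rough sketch of this classification could look like the following. It assumes the hand is given as a list of 21 `(x, y, z)` points in MediaPipe's hand landmark order; the 30-degree tolerance, the function names, and the decision to ignore the thumb are assumptions made for this example, not tuned values from the project.

```python
import math

# (MCP, PIP, DIP) landmark indices per finger in MediaPipe's 21-point hand model.
FINGER_JOINTS = {
    "index": (5, 6, 7),
    "middle": (9, 10, 11),
    "ring": (13, 14, 15),
    "pinky": (17, 18, 19),
}

def _angle_deg(u, v):
    """Angle between two vectors in degrees."""
    denominator = math.hypot(*u) * math.hypot(*v)
    if denominator == 0:
        return 0.0
    cos_theta = sum(a * b for a, b in zip(u, v)) / denominator
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_theta))))

def finger_extended(points, finger, tolerance_deg=30.0):
    """A finger counts as extended when MCP->PIP and PIP->DIP roughly align."""
    mcp, pip, dip = (points[i] for i in FINGER_JOINTS[finger])
    mcp_to_pip = [b - a for a, b in zip(mcp, pip)]
    pip_to_dip = [b - a for a, b in zip(pip, dip)]
    return _angle_deg(mcp_to_pip, pip_to_dip) <= tolerance_deg

def classify_gesture(points):
    """Map the set of extended fingers to Rock, Paper, or Scissors."""
    extended = {f for f in FINGER_JOINTS if finger_extended(points, f)}
    if extended == {"index", "middle"}:
        return "Scissors"
    if extended == set(FINGER_JOINTS):
        return "Paper"
    return "Rock"
```

With MediaPipe results, the input list could be built as `[(lm.x, lm.y, lm.z) for lm in hand_landmarks.landmark]`.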

Naturally, we can use the same logic to check whether the fist is clenched by examining the distance between a fingertip and its MCP joint: the smaller the distance, the tighter the fist. The snippet below uses a related proxy, the distance between neighboring fingertips.

```python
import math

def vector_length(a, b):
    # Helper with an assumed definition: Euclidean distance between two points.
    return math.dist(a, b)

def clutched_or_relaxed(hand_landmarks):
    """Return 'close', 'open', or None if the hand is missing or ambiguous."""
    if hand_landmarks is None:
        return None

    landmarks = hand_landmarks.landmark

    # Fingertip positions in normalized image coordinates (depth ignored).
    pinky_tip = [landmarks[20].x, landmarks[20].y, 0]
    ring_finger_tip = [landmarks[16].x, landmarks[16].y, 0]
    middle_finger_tip = [landmarks[12].x, landmarks[12].y, 0]
    index_finger_tip = [landmarks[8].x, landmarks[8].y, 0]

    # Gaps between neighboring fingertips.
    index_middle_finger = vector_length(index_finger_tip, middle_finger_tip)
    middle_ring_finger = vector_length(middle_finger_tip, ring_finger_tip)
    pinky_ring = vector_length(ring_finger_tip, pinky_tip)
    gaps = [index_middle_finger, middle_ring_finger, pinky_ring]

    min_threshold = 0.03
    max_threshold = 0.06

    if all(round(gap, 3) <= min_threshold for gap in gaps):
        return 'close'
    if all(round(gap, 3) >= max_threshold for gap in gaps):
        return 'open'
    # Neither clearly closed nor clearly open.
    return None
```

However, the clenched fist is just one indicator. We can also track and interpret mouth movements and eye behavior using the same principle. Yet, these measures are only moderately significant. Thus, we use them as a weighting factor.

The Win-Lose-Trade Algorithm

We aim to maximize wins and minimize losses. For this, we need a functional Win-Lose-Trade Algorithm. Studies hint at a specific pattern: when a player wins with a move, they tend to repeat that choice in the next round. After a loss, on the other hand, the player is more likely to switch to the next move in the sequence Rock-Paper-Scissors.
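As a minimal sketch of that pattern (not the project's actual code), the prediction and the NPC's answer could be written like this. The function names are made up for the example, and treating a draw like a win (the player repeats their move) is purely my assumption, since the pattern above only covers wins and losses.

```python
SEQUENCE = ["Rock", "Paper", "Scissors"]

# Each key beats its value.
BEATS = {"Rock": "Scissors", "Paper": "Rock", "Scissors": "Paper"}

def outcome_for_player(player_move, npc_move):
    """Return 'win', 'loss', or 'draw' from the player's point of view."""
    if player_move == npc_move:
        return "draw"
    return "win" if BEATS[player_move] == npc_move else "loss"

def predict_next_player_move(player_move, npc_move):
    """Winners repeat their move; losers shift to the next move in the sequence."""
    if outcome_for_player(player_move, npc_move) == "loss":
        next_index = (SEQUENCE.index(player_move) + 1) % len(SEQUENCE)
        return SEQUENCE[next_index]
    # Win: repeat. Draw: assumed to repeat as well.
    return player_move

def counter_move(predicted_player_move):
    """The move that beats the predicted player move."""
    return next(move for move, loser in BEATS.items() if loser == predicted_player_move)
```

For example, if the player lost with Rock against Paper, the sketch predicts Paper next, so the NPC would answer with `counter_move("Paper")`, which is Scissors.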

We implement this Win-Lose-Trade Algorithm but add a weighting factor. A counter function tallies specific indicators and evaluates them by frequency and sequence: how often a move X occurred, and how long ago (Y). Since the game gives the user a fixed time to decide their move, their choice becomes more resolute as the deadline approaches. The frequency also provides a certain level of confidence. For instance, if the player closed their hand 10 times in the last 15 seconds and held it closed for 5 seconds, we'd weigh toward Rock.
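One way to express "how often and how long ago" in code is a recency-weighted tally. The sketch below is an illustration under my own assumptions: observations arrive as `(timestamp_seconds, state)` pairs, and each observation's weight halves for every `half_life` seconds it lies before the deadline. Neither the function name nor the half-life value comes from the project.

```python
def recency_weighted_scores(observations, deadline, half_life=5.0):
    """Return each state's share of the total weight, favoring recent observations.

    observations: iterable of (timestamp_seconds, state) pairs.
    deadline: time in seconds at which the move is locked in.
    """
    totals = {}
    for timestamp, state in observations:
        age = max(0.0, deadline - timestamp)
        # An observation `half_life` seconds before the deadline counts half as much.
        weight = 0.5 ** (age / half_life)
        totals[state] = totals.get(state, 0.0) + weight

    total_weight = sum(totals.values())
    if total_weight == 0:
        return {}
    return {state: weight / total_weight for state, weight in totals.items()}
```

A state that shows up often and close to the deadline ends up with a share near 1.0, which can then serve as the confidence of the weighting.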

Code implementation

```python
def determine_rock_paper_scissors_mouth_eye_hand(states):
    """Guess a move from a sequence of 'open'/'close' observations."""
    open_count = 0
    close_count = 0
    max_duration_open = 0
    max_duration_close = 0
    current_duration = 0
    current_state = None

    for state in states:
        # Anything other than 'open' counts as 'close'.
        state = 'open' if state == 'open' else 'close'

        # Track how long the current state has been held without interruption.
        if state == current_state:
            current_duration += 1
        else:
            current_state = state
            current_duration = 1

        if state == 'open':
            open_count += 1
            max_duration_open = max(max_duration_open, current_duration)
        else:
            close_count += 1
            max_duration_close = max(max_duration_close, current_duration)

    if max_duration_open > max_duration_close and open_count > close_count:
        return "Paper"
    elif max_duration_open < max_duration_close and open_count <= close_count:
        return "Rock"
    else:
        return "Scissors"
```

We can now choose a definitive move using the Win-Lose-Trade Algorithm and the weighting. The weighting assists without overriding the Win-Lose-Trade Algorithm. For instance:

1. Win-Lose-Trade says the next move is Rock and the weighting confirms this with 70%: Rock is chosen.
2. Win-Lose-Trade suggests the next move is Paper and the weighting disputes this with 80%: Paper is still chosen, until three strikes have accumulated, at which point the weighting takes precedence.
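The interplay between the two signals can be sketched as a small state machine. This is an illustration only: the class name `MoveSelector` is invented, the confidence percentages are left out, and I assume that a strike is any round in which the weighting disagrees, that agreement resets the count, and that the count starts over once the weighting has taken precedence.

```python
class MoveSelector:
    """Follow the Win-Lose-Trade suggestion until the weighting has disagreed often enough."""

    def __init__(self, max_strikes=3):
        self.max_strikes = max_strikes
        self.strikes = 0

    def choose(self, strategy_move, weighted_move):
        if weighted_move == strategy_move:
            # Both signals agree: clear the strikes (assumption) and go with the move.
            self.strikes = 0
            return strategy_move

        self.strikes += 1
        if self.strikes >= self.max_strikes:
            # Enough disagreement: the weighting takes precedence this round.
            self.strikes = 0
            return weighted_move

        # Disagreement, but not enough strikes yet: keep trusting Win-Lose-Trade.
        return strategy_move
```

With the default of three strikes, two disputed rounds still return the Win-Lose-Trade suggestion, and the third returns the weighted move instead.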

About the Game

Now that we have everything ready, we can dive into the game. The user has the opportunity to choose a move within the first 30 seconds; after that, the move is held so the camera can recognize it. With the information gathered in the 30 seconds before the move was finalized, we make a prediction, which the player sees. Thus, one round takes 30 seconds. As there's never direct insight into the user's move, this is a completely legitimate game.

Conclusion: IS THE NPC BETTER?

Instead of looking at the facts, let's ask the poor victim, Alex, who had to play against the Rock-Paper-Scissors NPC.

Me: "Alex, how did it feel to play against an NPC?"

Alex: "Ehm, I found it cool"

%[https://www.youtube.com/watch?v=YsZ1OhBmcAc]

Demo (a work in progress):